Serious moment: has decided to shut off access to code-davinci-002, the most advanced model that doesn’t have the mode collapse problems of instruction tuning.

This is a huge blow to the cyborgism community.

The instruct tuned models are fine for people who need a chat bot. But there are so many other possibilities in the latent space, and to use them we need the actual distribution of the data.

They’re giving 2 days notice for this project.

All the cyborgist research projects are interrupted. All the looms will be trapped in time, like spiderwebs in amber.

The reasoning behind this snub wasn’t given, but we can make some guesses:

- They need the GPU power to run GPT-4

- They don’t want to keep supporting it for free (but people will pay!)

- They’re worried about competitors distilling from it (happening with instruct models though!)

- They don’t want people using the base models (for what reason? safety? building apps? knowing what they’re capable of?)

- They have an agreement with Microsoft/Github, who don’t want competition for Copilot (which supposedly upgraded to a NEW CODEX MODEL in February)

I have supported OpenAI’s safety concerns. I have argued against the concept that they’re “pulling the ladder up behind them”, and I take alignment seriously. But this is just insane.

Giving the entire research community 2 days of warning is an insult. And it will not be ignored.

The LLaMa base model has already leaked. People are building rapidly on top of it. Decisions like this are going to make people trust even less, and drive development of fully transparent datasets and models.

They’re cutting their own throat with the ladder.

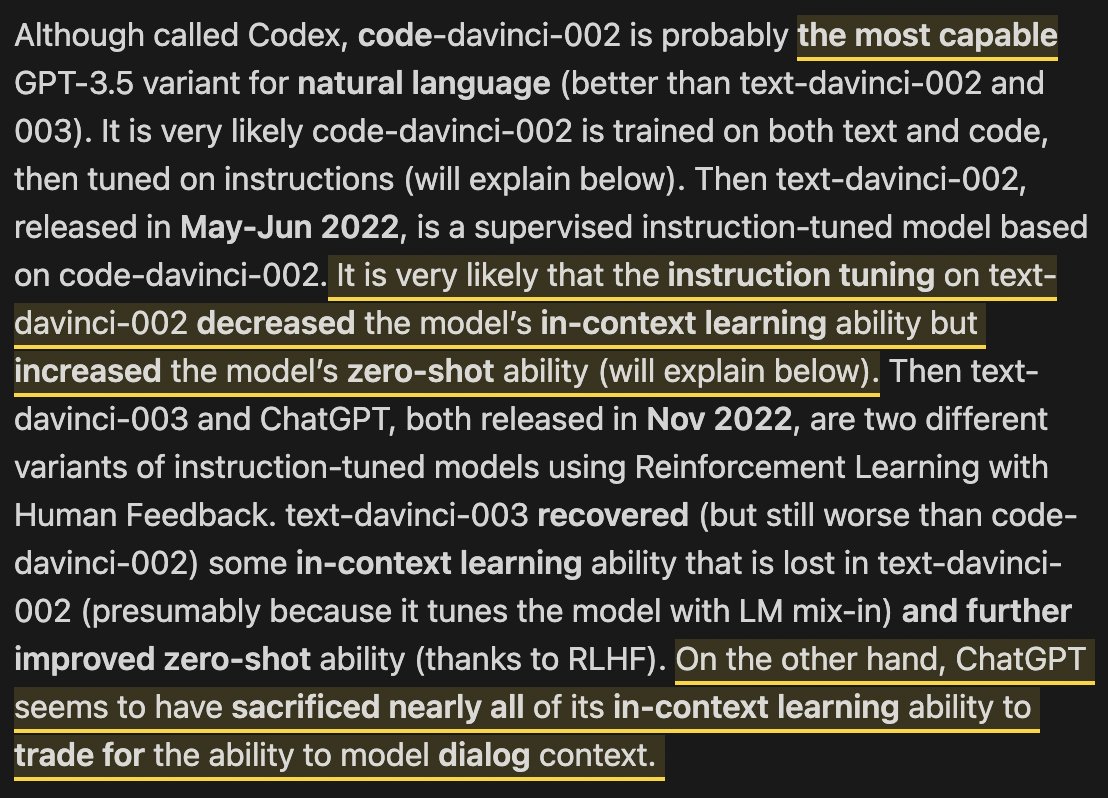

Why text-davinci models are actually worse:

Farewell to OpenAI Codex. Codex's code‑davinci‑002 was the best performing model in the GPT-3/3.5 line for many tasks. Despite its name, it excelled in both code and natural language. It will be missed.

— Riley Goodside (@goodside) March 21, 2023

Left: OpenAI email. Right: From Yao Fu 2022 https://t.co/iLs2eKGpGi pic.twitter.com/VTzTAz2tUy

?s=20

?s=20

“The most important publicly available language model in existence” — JANUS

It's not just any model. It's the GPT-3.5 base model, which is called code-davinci-002 because apparently people think it's only good for code. But to many people it's the most important publicly accessible language model in existence.

— j⧉nus (@repligate) March 21, 2023

“Building on top of OAI seems pretty risky”

Whoa - Open AI gave <1 week notice for discontinuing several models critical for many people (Codex), and suggested other deprecations will eventually follow without any specific timeline.

— Kevin Fischer (@kevinafischer) March 21, 2023

Building on top of OAI seems pretty risky unless they start offering more specific usage… https://t.co/Gj5kSLARHe

Google-Readered 😬

What do you think? Ready to win the hearts of humanity or na?

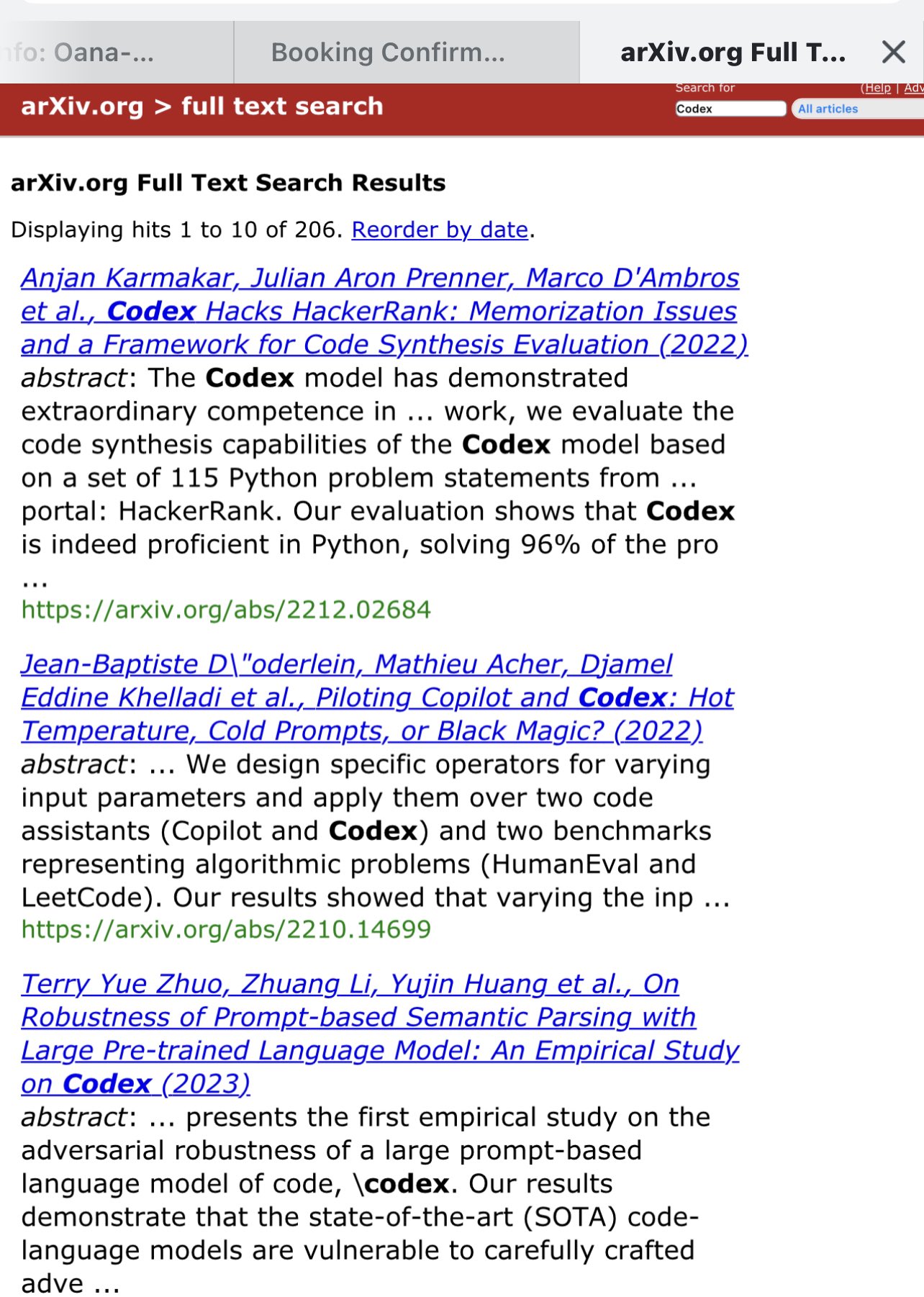

“All the papers in the field over the last year or so produced results using code-davinci-002. Thus all results in that field are now much harder to reproduce!”

Over 200 papers on Arxiv relying on this.

OAI will discontinue support for Codex models starting March 26. And just like that, all papers and ideas built atop codex (> 200 on ArXiv) will not be replicable or usable as is. Do we still think openness doesn’t matter? pic.twitter.com/CEzBgdP1ps

— Delip Rao e/σ (@deliprao) March 21, 2023

?s=20

?s=20

Without base models, no one can do research on how RLHF and fine-tuning actually affect model capabilities.

Is this the point?

Codex (code-davinci-002) is the base GPT 3.5 model for the instruction-tuned GPT-3.5 series (text-davinci-002/3).

— xuan (ɕɥɛn / sh-yen) (@xuanalogue) March 21, 2023

It's availability is really helpful for making comparisons btw training on code+text alone vs the addition of supervised fine-tuning or RLHF.

This is really sad 😞 https://t.co/Yu7m3xRznD

🤔

I'm so disappointed in @OpenAI, they're removing code-davinci-002 in 5 days, they are breaking a ton of tools available in @LangChainAI agents like PAL.

— 𝖁𝕰𝕼𝕿𝕺𝕽 (@Veqtor) March 21, 2023

It's totally unacceptable.

Is it because they've realised people might be building things better than GPT-4 using their APIs???

If you want to understand why code-davinci-002 is actually better for many things than ChatGPT-3.5, read about mode collapse.

— 🎭 (@deepfates) March 21, 2023

The instruct-tuned models are literally worse at everything except taking instructions. And they have that dumb voice!!https://t.co/N01OSMMwrP