So basically, I trained a bunch of video fine-tunes on different movies, and then I made a multidimensional Netflix where you can see how each movie would interpret a given prompt.

You can fine-tune video models now on Replicate. But what can they do? We built Repflix to answer that question.

— Replicate (@replicate) January 29, 2025

Each video is generated using a different fine-tuned model, showing how the same prompt can produce different styles and interpretations.

Try it at the link below: pic.twitter.com/An6q3IWalM

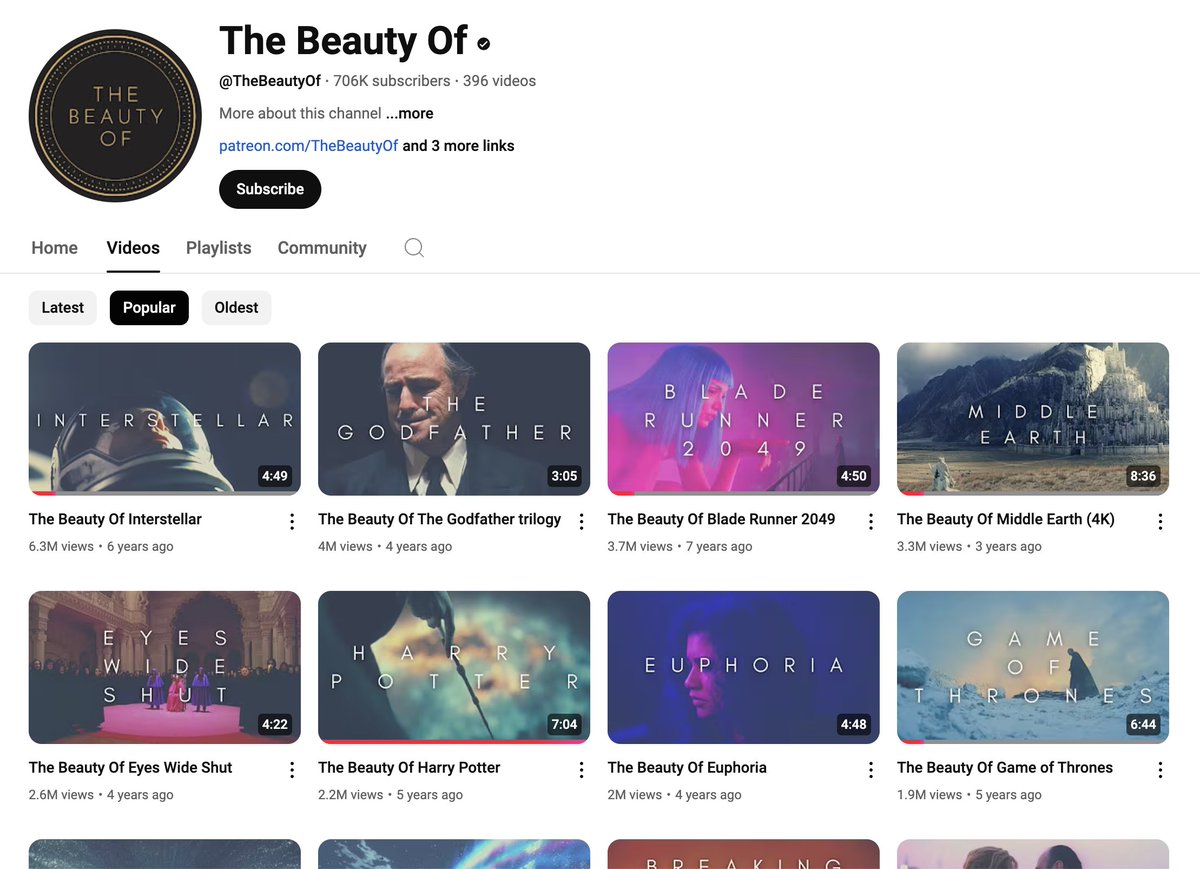

There’s this YouTube channel called “The Beauty Of”, which just does supercuts of the best scenes in various movies. This was handy because I didn’t want to train on a whole movie (that would take a long time), and the supercuts give me the key shots that show what makes each movie unique.

I grabbed these with ‘s create-video-dataset model which scrapes YouTube, cuts the video into segments, and automatically captions each clip using Qwen2-VL-7B-Instruct, a multimodal model that can understand videos.
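Before uploading a captioned dataset, it can help to check that every clip actually has a caption. The sketch below assumes a layout where each clip `name.mp4` sits next to a caption file `name.txt`. That is a common convention for LoRA training datasets, but it is an assumption here, so check the trainer's docs for the format it expects. The extension list is also a hypothetical choice.

```python
from pathlib import Path

# Hypothetical: extensions treated as video clips; adjust for your dataset.
VIDEO_EXTS = {".mp4", ".mov", ".webm"}


def pair_clips_with_captions(dataset_dir: str) -> tuple[list[tuple[Path, str]], list[Path]]:
    """Return (clip, caption) pairs plus any clips that lack a caption file.

    Assumes each clip `name.<ext>` has its caption in `name.txt` in the same folder.
    """
    root = Path(dataset_dir)
    pairs: list[tuple[Path, str]] = []
    missing: list[Path] = []
    for clip in sorted(root.iterdir()):
        if clip.suffix.lower() not in VIDEO_EXTS:
            continue  # skip captions and any unrelated files
        caption_file = clip.with_suffix(".txt")
        if caption_file.exists():
            pairs.append((clip, caption_file.read_text(encoding="utf-8").strip()))
        else:
            missing.append(clip)
    return pairs, missing
```

Any clip that comes back in `missing` would either need a caption written by hand or should be dropped before training.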

Then I uploaded those datasets to the HunyuanVideo LoRA trainer, which is a modified version of ‘s Musubi trainer. I tested a couple of times first and found that 2 epochs were not enough, but 8 epochs gave a nice, solid style transfer.

Depending on the number of clips, an 8-epoch training run at batch size 8 takes about 30-60 minutes.

That’s roughly $3-$6, since it runs on an H100. Not bad, but it helps to experiment with smaller runs first.
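To budget a run before starting it, here is a rough sketch. It assumes one optimizer step per batch with no dataset repeats, which is why the clip count drives the run time. It also assumes a price of about $6 per GPU-hour, which is simply the rate implied by the "$3-$6 for 30-60 minutes" figures above, not a quoted price. Check Replicate's pricing page for the current H100 rate. The clip count of 100 is an illustrative number.

```python
import math

# Assumption: ~$6 per GPU-hour, the rate implied by "$3-$6 for 30-60 minutes".
# Check Replicate's pricing page for the current H100 price.
USD_PER_HOUR = 6.0


def training_steps(num_clips: int, epochs: int = 8, batch_size: int = 8) -> int:
    """Optimizer steps for a run, assuming one step per batch and no dataset repeats."""
    if min(num_clips, epochs, batch_size) <= 0:
        raise ValueError("num_clips, epochs and batch_size must be positive")
    # The last batch of each epoch may be partial, hence the ceiling.
    return epochs * math.ceil(num_clips / batch_size)


def training_cost_usd(minutes: float, usd_per_hour: float = USD_PER_HOUR) -> float:
    """Cost of a run billed by GPU time, rounded to cents."""
    return round(minutes / 60 * usd_per_hour, 2)


print(training_steps(100))             # 104 steps for 100 clips at 8 epochs
print(training_steps(100, epochs=2))   # 26 steps for a quick 2-epoch test
print(training_cost_usd(30), training_cost_usd(60))  # 3.0 6.0
```

If run time scales roughly linearly with the number of steps, a 2-epoch test run costs about a quarter of a full 8-epoch run. Fixed startup overhead makes this only approximate for small runs.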

You end up with your own Replicate model: https://replicate.com/deepfates/hunyuan-spider-man-into-the-spider-verse

If you provide your Hugging Face token at training time, your model will also be saved to your Hugging Face account. This is great if you want to download the LoRA weights and use them in your local setup or with other inference providers. Open source, baby!

Once the models were finished, I ran a bunch of tests with them. You can see some of those in this thread, where I compare a few models at a time on different prompts.

It's been a lot of fun to experiment with this. @replicate is the only place where you can train on video clips, instead of just static images, so you can capture the cinematography of different styles, as you see here.

— 🎭 (@deepfates) January 24, 2025

See more models in the thread 👇 https://t.co/O1CBWtVD4g pic.twitter.com/HJmg2UbXs7

I also ran some parameter sweeps, where you keep the seed and prompt the same but change one setting at a time to see its effect. That’s what gave me the idea for Repflix: an interactive way to explore these, with over 2,000 pre-generated videos at different settings.
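A one-at-a-time sweep like this is easy to generate programmatically. The sketch below holds the prompt and seed fixed and varies a single field per run, leaving every other field at its base value. The setting names and defaults (`lora_strength`, `guidance_scale`, `num_steps`) are hypothetical placeholders, not necessarily the inputs the fine-tuned models expose.

```python
from dataclasses import dataclass, replace
from typing import Any


@dataclass(frozen=True)
class GenSettings:
    # Hypothetical settings and defaults; the real model inputs may differ.
    prompt: str
    seed: int = 42
    lora_strength: float = 1.0
    guidance_scale: float = 6.0
    num_steps: int = 30


def one_at_a_time_sweep(base: GenSettings, grid: dict[str, list[Any]]) -> list[GenSettings]:
    """Vary one field at a time; prompt, seed and all other fields keep their base values."""
    runs = []
    for field_name, values in grid.items():
        if field_name in ("prompt", "seed"):
            raise ValueError("prompt and seed stay fixed in this kind of sweep")
        for value in values:
            runs.append(replace(base, **{field_name: value}))
    return runs


base = GenSettings(prompt="a cat walking through a neon-lit alley")
sweep = one_at_a_time_sweep(base, {
    "lora_strength": [0.6, 0.8, 1.0, 1.2],
    "guidance_scale": [4.0, 6.0, 8.0],
})
print(len(sweep))  # 7
```

Because only one setting changes per run, any visual difference between two videos in the same row can be attributed to that setting. The total number of videos grows as models × prompts × sweep values, which is how pre-generated collections like this get large quickly.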

Then it was just a matter of building this site to share them! The code for the site is open source; there’s a link at the bottom of the page. I’ll share the scripts for generating video grids soon!

Okay, these are still pretty rough; I’ll try to improve them soon to make them more flexible and better organized. One cool thing to note: you can run these scripts directly with uv, without setting up a Python environment yourself. The dependencies are declared right in the file!